Hand Gesture Recognition for Deaf People in MATLAB

Abstract: This project presents a prototype system that recognizes the hand gestures of deaf people so that they can communicate more effectively with hearing people. The problem is addressed with digital image processing, using skin detection, image segmentation, image filtering, and the cross-correlation method. The system aims to recognize ASL (American Sign Language) gestures made by deaf signers and convert them into speech.

I. INTRODUCTION

In this project, we recognize gestures that a deaf person makes in front of a webcam, convert each gesture into the corresponding text, and then convert that text into speech.

We use a web camera to capture the gestures and apply techniques such as skin detection, image segmentation, and cross-correlation, with segmentation used to crop the required part of the image. Because the illumination in the captured picture is often not good enough, we use the gray world algorithm to compensate for it.

Around 500,000 to 2,000,000 speech- and hearing-impaired people express their thoughts through sign language in their daily communication. These numbers may differ from other sources, but ASL is commonly cited as the third most-used sign language in the world.

II. WHAT IS ASL?

ASL (American Sign Language) is a language used by deaf and hard-of-hearing people in which hand shapes, facial expressions, and body language convey thoughts to others without using sound. Because ASL has its own grammar and vocabulary, differing from English in areas such as tense and articles (it does not use "the"), it is considered a language separate from English. ASL is generally the preferred communication tool for deaf and speech-impaired people.

III. PROPOSED APPROACH

Pre-processing the image taken from the camera is essential to reduce the computational effort needed in later steps. In addition, many factors strongly affect the result: lighting, the environment and background of the image, the position and orientation of the signer's hand and body, and the camera's parameters and focus.

Steps:

A. Taking input:

First, we capture the hand region from the input video by using a frame with specified boundaries and cropping the image, so that the cropped image contains only the arm showing the gesture. We then apply linear image filtering to enhance the image, for example smoothing, sharpening, and edge enhancement.
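The cropping and linear-filtering step can be sketched as follows. This is a Python/NumPy illustration rather than the post's MATLAB code, so it runs without the Image Processing Toolbox. The crop-box arguments and the two 3×3 kernels (a box blur for smoothing and a common sharpening kernel) are assumptions, since the post does not say which kernels it used. In MATLAB, `imfilter` with a kernel from `fspecial` plays a similar role.

```python
import numpy as np

# Sketch only: the crop box and kernels below are illustrative assumptions,
# not the values used in the original MATLAB project.

def crop(frame, top, left, height, width):
    """Keep only the region inside a fixed box (the 'frame with specified boundaries')."""
    return frame[top:top + height, left:left + width]

def filter2d(img, kernel):
    """Linear filtering of a 2-D grayscale image by correlation with a small kernel.

    Border pixels are replicated so the output has the same size as the input.
    """
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img.astype(float), ((ph, ph), (pw, pw)), mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    # Accumulate each kernel weight times the correspondingly shifted image.
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out

# 3x3 box kernel: smoothing (each pixel becomes the mean of its 3x3 neighbourhood).
SMOOTH = np.full((3, 3), 1.0 / 9.0)
# 3x3 sharpening kernel: weights sum to 1, so flat regions are left unchanged.
SHARPEN = np.array([[0, -1, 0], [-1, 5, -1], [0, -1, 0]], dtype=float)
```

Edge enhancement fits the same `filter2d` call with a different kernel, such as a Laplacian.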

B. Image refining:

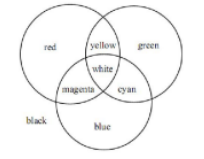

We apply the gray world algorithm to compensate for illumination, which helps in detecting skin. The RGB color components represent the incoming light, that is, the brightness values of the image obtained through red, green, and blue filters. The main purpose of color segmentation is to find particular objects (for example, lines and curves) in images. In this process, every pixel in the image is assigned a label such that pixels with the same label share certain visual characteristics.

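Here is a minimal sketch of gray world illumination compensation. It assumes the common per-channel-gain formulation, because the post does not give its exact formula. The algorithm assumes the scene averages to gray, so it scales each of R, G, and B until that channel's mean matches the mean of all three. `gray_world` is a hypothetical helper name, and the code is Python/NumPy rather than MATLAB.

```python
import numpy as np

def gray_world(rgb):
    """Gray world illumination compensation for an H x W x 3 image with values in [0, 255].

    Assumption: per-channel gains, so that each channel mean becomes the
    average of the three channel means (one common form of gray world).
    """
    img = rgb.astype(float)
    channel_means = img.reshape(-1, 3).mean(axis=0)   # mean of R, G, B
    gray = channel_means.mean()                       # target gray level
    gains = gray / np.maximum(channel_means, 1e-6)    # guard against division by zero
    # Scale each channel, clip to the 8-bit range, and truncate back to uint8.
    return np.clip(img * gains, 0, 255).astype(np.uint8)
```

After correction, a frame with a strong color cast has roughly equal channel means, which keeps the later Cb/Cr skin thresholds more stable under different lighting.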

C. Detecting skin pixels:

Several color spaces can be used for the color space transformation in skin detection. Candidate color spaces considered for the skin detection process are:

a) CIEXYZ

b) YCbCr

c) YIQ

d) YUV

The color spaces were compared using performance metrics such as scatter matrices computed for the skin and non-skin classes, and the histograms of skin and non-skin pixels after the color space transformation.

The YCbCr color space performed well in 3 of the 4 performance metrics used, so we chose it for the skin detection algorithm. In YCbCr, the Y component represents luminance, while Cb and Cr store two color-difference components: Cb is the difference between the blue component and a reference value, and Cr is the difference between the red component and a reference value.

Code snippet to convert from RGB to YCbCr:

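imgycbcr = rgb2ycbcr(Img_gray);   % Img_gray: the RGB frame after gray world correction

YCb = imgycbcr(:,:,2);            % Cb (blue-difference) channel

YCr = imgycbcr(:,:,3);            % Cr (red-difference) channel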
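For readers without MATLAB, the conversion above can be sketched in Python/NumPy with the ITU-R BT.601 equations, which to our understanding are what `rgb2ycbcr` applies to 8-bit input. Rounding may differ from MATLAB by one level in places. The helper name `rgb_to_ycbcr` is hypothetical. Channels 1 and 2 of the result (0-based) are Cb and Cr, matching `imgycbcr(:,:,2)` and `imgycbcr(:,:,3)` in the MATLAB snippet.

```python
import numpy as np

# ITU-R BT.601 coefficients for R, G, B scaled to [0, 1]. The output uses the
# 8-bit "studio" range: Y in [16, 235], Cb and Cr in [16, 240].
_M = np.array([[65.481, 128.553, 24.966],
               [-37.797, -74.203, 112.0],
               [112.0, -93.786, -18.214]])
_OFFSET = np.array([16.0, 128.0, 128.0])

def rgb_to_ycbcr(rgb):
    """Convert an H x W x 3 uint8 RGB image to uint8 YCbCr (sketch of a BT.601 conversion)."""
    x = rgb.astype(float) / 255.0
    # Each pixel's [R, G, B] row is multiplied by the coefficient matrix, then offset.
    ycbcr = x @ _M.T + _OFFSET
    return np.clip(np.round(ycbcr), 0, 255).astype(np.uint8)
```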

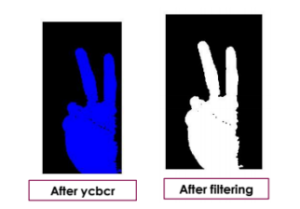

After applying the gray world algorithm, we convert the RGB components to YCbCr, which makes it simpler to separate skin from non-skin pixels. In effect, this step reduces the three color components to a single skin/non-skin decision per pixel based on the YCbCr values. We then mark the skin region in blue and apply a median filter to remove noise from the image. Finally, we resize the image to a fixed size (256 × 256 pixels in our case) so that images can be compared efficiently.
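The noise-removal and resizing steps might look like this in Python/NumPy. The post most likely relies on MATLAB's `medfilt2` and `imresize`. Here, the 3×3 median window and nearest-neighbor interpolation are assumptions (`imresize` defaults to bicubic), and `median_filter` and `resize_nearest` are hypothetical helper names.

```python
import numpy as np

def median_filter(img, size=3):
    """Median-filter a 2-D array with a size x size window (edge pixels replicated).

    Sketch: removes isolated noisy pixels from a skin mask, similar in spirit to medfilt2.
    """
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    # Stack every shifted copy of the image, then take the median across the stack.
    windows = [padded[i:i + h, j:j + w] for i in range(size) for j in range(size)]
    return np.median(np.stack(windows), axis=0).astype(img.dtype)

def resize_nearest(img, out_h=256, out_w=256):
    """Nearest-neighbour resize of a 2-D array to out_h x out_w (default 256 x 256)."""
    h, w = img.shape
    rows = np.arange(out_h) * h // out_h   # source row for each output row
    cols = np.arange(out_w) * w // out_w   # source column for each output column
    return img[rows[:, None], cols[None, :]]
```

Resizing every image to the same 256 × 256 size is what makes a pixel-by-pixel comparison such as cross-correlation possible.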

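Code snippet to detect human skin:

% r, c: row and column indices of pixels whose Cb and Cr fall in the skin range

[r,c,v] = find(YCb>=77 & YCb<=127 & YCr>=133 & YCr<=173);

numind = size(r,1);   % number of skin pixels found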
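The same Cb/Cr thresholds can be expressed as a Boolean mask. This Python/NumPy sketch uses the ranges from the MATLAB snippet (Cb in [77, 127], Cr in [133, 173]). It assumes the input is an 8-bit YCbCr image, such as the output of a BT.601 conversion. The function names are hypothetical.

```python
import numpy as np

def skin_mask(ycbcr, cb_range=(77, 127), cr_range=(133, 173)):
    """Boolean mask of pixels whose Cb and Cr lie inside the skin ranges used in the post."""
    cb = ycbcr[:, :, 1]
    cr = ycbcr[:, :, 2]
    return ((cb >= cb_range[0]) & (cb <= cb_range[1]) &
            (cr >= cr_range[0]) & (cr <= cr_range[1]))

def skin_pixels(ycbcr):
    """Row/column indices of skin pixels and their count (like find + size(r,1) in MATLAB).

    Note: np.nonzero returns indices in row-major order, whereas MATLAB's find
    lists them column by column; the set of pixels is the same.
    """
    rows, cols = np.nonzero(skin_mask(ycbcr))
    return rows, cols, rows.size
```

In practice, the mask is usually more convenient than the index lists as input to the median filter and to cross-correlation.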

D. Cross-Correlation technique:

Cross-correlation is used to compare pixel intensities. We compute the cross-correlation between the image refined from the input video and every image in our database.

We keep the ten images with the highest corr2() values. The image at the top of that list, which has the highest corr2() value, is the one most similar to the input image. This completes the recognition, and the corresponding character is stored.
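This sketch of the matching step assumes the database is a list of `(label, image)` pairs already resized to the same 256 × 256 size as the query. `corr2` below computes the 2-D correlation coefficient, the same quantity MATLAB's `corr2` returns. `best_matches` and the database layout are hypothetical, and the code is Python/NumPy rather than the post's MATLAB.

```python
import numpy as np

def corr2(a, b):
    """2-D correlation coefficient of two equal-size arrays.

    r = sum((A - mean A)(B - mean B)) / sqrt(sum((A - mean A)^2) * sum((B - mean B)^2))
    Assumption: returns 0.0 for a constant image (MATLAB's corr2 gives NaN there)
    so that ranking still works.
    """
    a = a.astype(float) - a.mean()
    b = b.astype(float) - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def best_matches(query, database, k=10):
    """Rank (label, image) pairs by corr2 against the query; return the top-k (score, label)."""
    scored = [(corr2(query, img), label) for label, img in database]
    scored.sort(key=lambda s: s[0], reverse=True)
    return scored[:k]
```

With `k=10`, this mirrors the top-ten list described above, and `best_matches(query, database)[0][1]` is the recognized character.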

E. Text to Speech conversion:

For the matched image above, the corresponding character is 'V'. We append this character to a file for later reference. Finally, we convert the text into speech, which is the final output.

IV. DATASET & EQUATIONS

Because we use American Sign Language, our dataset should contain all 26 letter gestures. To improve accuracy, we collected about 260 images, that is, around 10 images per gesture.

The following image shows the 26 types of ASL gestures.

We are using around 160 images for now in our database.

V. CONCLUSION:

Based on the results of the implementation, we conclude that the cross-correlation and color segmentation methods work for hand gesture recognition, although with somewhat limited accuracy. The approach is applicable and could be implemented on a smartphone with a front-facing camera. However, we found some issues related to time and to the distance at which the input is captured from the video.

Source code:

The source code is available at: https://github.com/imkalyan/Hand-Gesture-Recognition
